I've been slipping back into coding after putting all of my focus on my secret writing project for a few months[1], both as a streamer (who panicked and quit after noticing someone was watching for the first time) and as my usual hacky-slacky self.

I was kicking off a small VR experiment today and wanted to build something with an HMD and simple hand tracking, but without including a whole bunch of Oculus stuff. This is a fairly small and rudimentary tutorial, but most of the information about setting up a project with just the most basic tools is tucked away in YouTube tutorials. I know lots of people find it easiest to pick something up from videos, but I'm a big fan of the written word.

If you just want the code though, I put it all into a release here so I can continue messing around with that project.

Here's a little mini-guide in lieu of responsible things like commenting the code. The goal was to avoid using any headset-specific code, but because I only have a Rift, I can only confirm that it works with a Rift.

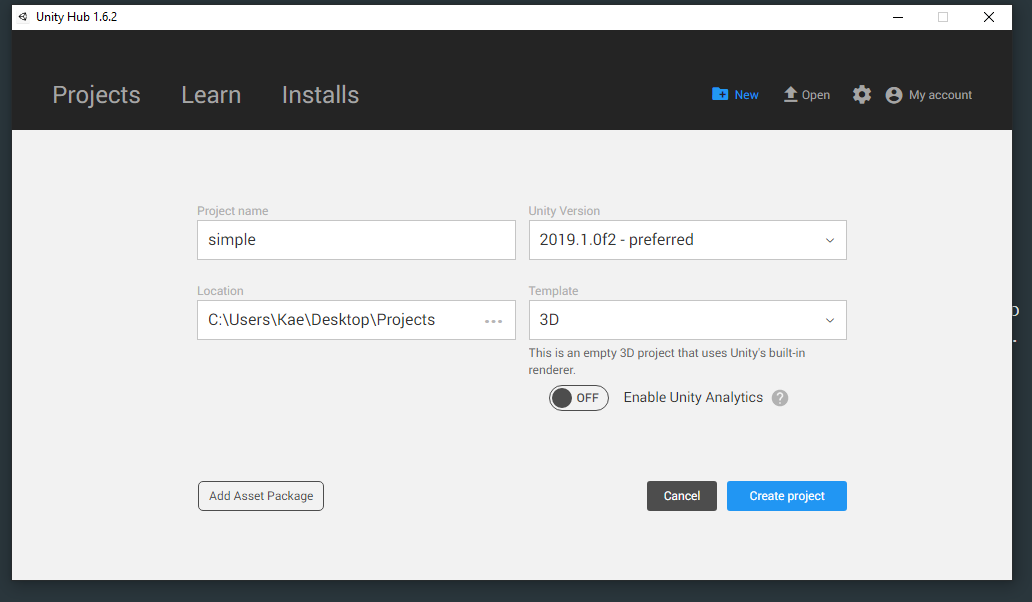

First, I create a new project with the 3D template:

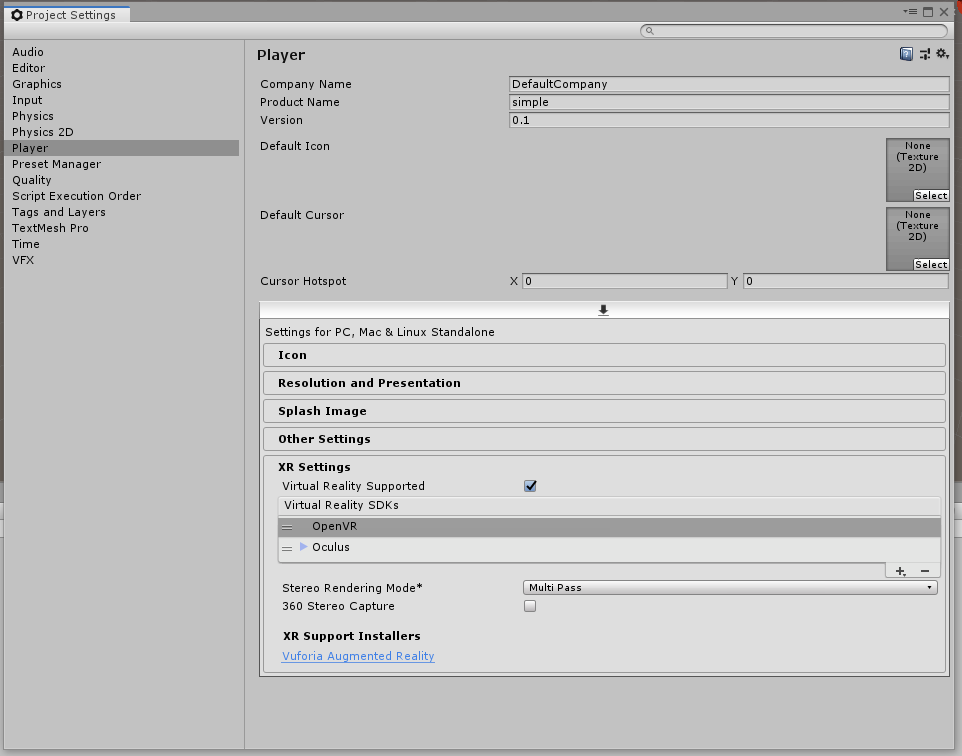

Next, I go to the 'Edit' menu and select 'Project Settings'. I select the 'Player' tab and check 'Virtual Reality Supported':

At this point, if my Rift is connected and functioning properly, the main camera should be attached to my HMD when I hit the Play button in Unity, and I can look around the project.
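
If the camera doesn't follow the headset, a quick sanity check from code can help narrow things down. This is a minimal sketch, not part of my project: the class name VRStatusLogger is made up, and it assumes the same built-in UnityEngine.XR API that the tracking script in this post uses (some of these properties may be flagged as obsolete in newer Unity versions).

```csharp
using UnityEngine;
using UnityEngine.XR;

// Hypothetical helper (not in the original project): attach it to any
// GameObject to log the XR state once when the scene starts.
public class VRStatusLogger : MonoBehaviour {

    void Start() {
        // True when XR is enabled for the application
        Debug.Log("XR enabled: " + XRSettings.enabled);

        // Name of the XR device Unity currently has loaded
        Debug.Log("Loaded device: " + XRSettings.loadedDeviceName);

        // True when Unity has detected a headset in working order
        Debug.Log("Headset present: " + XRDevice.isPresent);
    }
}
```

The messages show up in the Console window after you hit Play.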

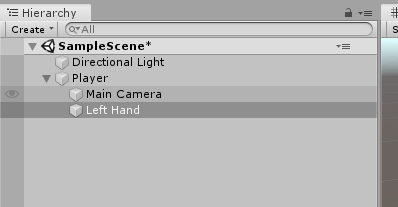

For the hands, I'll first create an empty GameObject, then make the camera a child of it, along with a sphere mesh that I'll name 'Left Hand':

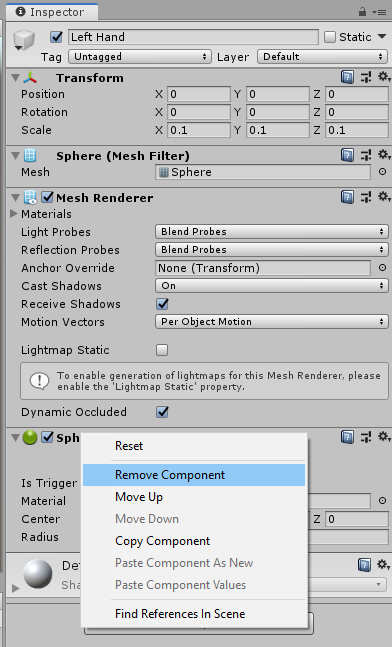

Then I remove the collider from the Left Hand. You can optionally scale the GameObject down as well; I found that 0.1 was a good size for hands:
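
If you'd rather not do this step by hand in the Inspector, the same thing can be sketched in a script. This is only an illustration: HandVisualSetup and HandScale are names I'm making up here, and the 0.1 default is just the size mentioned above.

```csharp
using UnityEngine;

// Hypothetical helper (not in the original project): attach it to the
// hand sphere to strip its collider and shrink it when the scene starts.
public class HandVisualSetup : MonoBehaviour {

    // Uniform scale applied to the sphere; 0.1 matched my hands reasonably well
    public float HandScale = 0.1f;

    void Awake() {
        // Sphere primitives come with a collider that the hands don't need here
        Collider handCollider = GetComponent<Collider>();
        if (handCollider != null) {
            Destroy(handCollider);
        }

        // Scale the sphere down evenly on all three axes
        transform.localScale = Vector3.one * HandScale;
    }
}
```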

Now, you'll want to make a new script; I called mine Tracking.cs. Make sure that you have Unity's XR tools included by adding "using UnityEngine.XR;" at the top. You can then delete the "Start()" method because we won't be needing it. The whole script looks like this:

    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;
    using UnityEngine.XR;

    public class Tracking : MonoBehaviour {

        // Unity has some built in means to detect what sort of input device you're using
        public XRNode NodeType;

        void Update() {
            // Set the Transform's position and rotation based on the orientation of the motion controller
            transform.localPosition = InputTracking.GetLocalPosition(NodeType);
            transform.localRotation = InputTracking.GetLocalRotation(NodeType);
        }
    }
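
If your Unity version flags the InputTracking calls above as obsolete, something along these lines might work instead. It's a sketch under a couple of assumptions: that you're on Unity 2019.1 or later, where the InputDevices API is available, and that the class name DeviceTracking (which I'm inventing here) doesn't clash with anything in your project. I've only confirmed the script above on my own setup.

```csharp
using UnityEngine;
using UnityEngine.XR;

// Hypothetical alternative to Tracking.cs for newer Unity versions.
public class DeviceTracking : MonoBehaviour {

    // Which tracked node (Left Hand, Right Hand, ...) this object follows
    public XRNode NodeType;

    void Update() {
        // Look up whatever device is currently assigned to the chosen node
        InputDevice device = InputDevices.GetDeviceAtXRNode(NodeType);

        Vector3 position;
        Quaternion rotation;

        // Only update the transform when the device actually reports a value,
        // so the object keeps its last pose if tracking drops out
        if (device.TryGetFeatureValue(CommonUsages.devicePosition, out position)) {
            transform.localPosition = position;
        }
        if (device.TryGetFeatureValue(CommonUsages.deviceRotation, out rotation)) {
            transform.localRotation = rotation;
        }
    }
}
```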

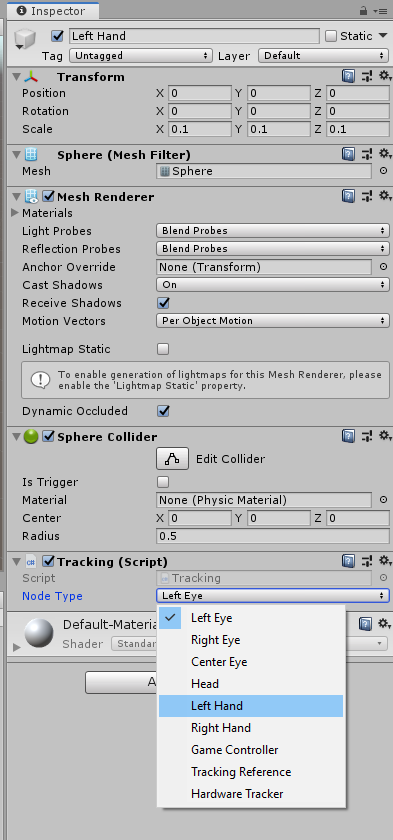

Click and drag the script you just wrote onto the Left Hand mesh, and set the public NodeType on the script to Left Hand:

If you duplicate the 'Left Hand' we've already made, rename it 'Right Hand', and set its NodeType to 'Right Hand', you should see two spheres tracking your hands when you hit play.
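
The duplicate-and-rename step could also be done from code. Here's a rough sketch that builds both hands at startup; it assumes the Tracking script from this post is in the project and that this component sits on the empty parent object that also holds the camera. HandSpawner and CreateHand are names I'm making up for the example.

```csharp
using UnityEngine;
using UnityEngine.XR;

// Hypothetical helper (not in the original project): attach it to the empty
// parent object to create both hand spheres when the scene starts.
public class HandSpawner : MonoBehaviour {

    void Start() {
        CreateHand("Left Hand", XRNode.LeftHand);
        CreateHand("Right Hand", XRNode.RightHand);
    }

    // Builds one sphere, parents it to this object, and wires up tracking
    GameObject CreateHand(string handName, XRNode node) {
        GameObject hand = GameObject.CreatePrimitive(PrimitiveType.Sphere);
        hand.name = handName;

        // Parent to the rig without keeping the world-space position
        hand.transform.SetParent(transform, false);
        hand.transform.localScale = Vector3.one * 0.1f;

        // The sphere primitive ships with a collider we don't need
        Destroy(hand.GetComponent<Collider>());

        // Reuse the Tracking script from this post and point it at the node
        Tracking tracking = hand.AddComponent<Tracking>();
        tracking.NodeType = node;
        return hand;
    }
}
```

With this on the parent object you wouldn't need the sphere objects in the scene at all, though I find it easier to see what's going on when they're in the hierarchy.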

If things look or track weird for whatever reason, try resetting the transforms of your game objects. If the hands start clipping while they're still really far from your face, change the Clipping Planes on the camera (lowering the Near value is the one that helps here).
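
Both of those fixes can be expressed in a small script if you want them applied every time the scene starts. This is a sketch, not something from my project: RigReset and NearClip are made-up names, and the 0.01 near plane is an assumed value that you may want to tune.

```csharp
using UnityEngine;

// Hypothetical helper (not in the original project): attach it to the empty
// parent object that holds the camera and the hands.
public class RigReset : MonoBehaviour {

    // Distance to the near clipping plane, in world units (assumed value)
    public float NearClip = 0.01f;

    void Start() {
        // Same effect as choosing 'Reset' on the Transform in the Inspector
        transform.localPosition = Vector3.zero;
        transform.localRotation = Quaternion.identity;
        transform.localScale = Vector3.one;

        // Pull the near plane in so the hands stay visible close to the face
        Camera rigCamera = GetComponentInChildren<Camera>();
        if (rigCamera != null) {
            rigCamera.nearClipPlane = NearClip;
        }
    }
}
```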

And that's how to do it. I'll come back and revise this later, as it's a "write while I do" project, but hopefully there's some helpful information here.

[1] It's still ongoing.